In a Microservices architecture, Services are dynamic, distributed and present in large numbers. A good Service discovery solution is needed to cope with this dynamic environment. In this blog, I will cover the basics of Service discovery and show how to use Consul for Service discovery.

What is Service discovery?

Service discovery should provide the following:

- Discovery – Services need to discover each other to get the IP address and port details needed to communicate with other services in the cluster.

- Health check – Only healthy services should participate in handling traffic; unhealthy services need to be dynamically pruned out.

- Load balancing – Traffic destined to a particular service should be dynamically load balanced across all instances providing that service.

Following are the critical components of Service discovery:

- Service Registry – Maintains a database of services and provides an external API (HTTP/DNS) to interact with it. This is typically implemented as a distributed key-value store.

- Registrator – Registers services dynamically to Service registry by listening to events.

- Health checker – Monitors Service health dynamically and updates Service registry appropriately.

- Load balancer – Distributes traffic destined to a service among its active instances.
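To make the four roles concrete, here is a minimal in-memory sketch in Python. It is a toy model only: the `Instance` and `Registry` names are hypothetical and do not reflect how Consul is implemented.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    service: str
    address: str
    port: int
    healthy: bool = True


@dataclass
class Registry:
    """Toy in-memory service registry (illustrative only, not Consul's design)."""
    instances: list = field(default_factory=list)
    counters: dict = field(default_factory=dict)

    def register(self, instance):
        # Registrator role: add an instance when a service starts.
        self.instances.append(instance)

    def mark(self, address, healthy):
        # Health checker role: record the latest health status.
        for inst in self.instances:
            if inst.address == address:
                inst.healthy = healthy

    def healthy_instances(self, service):
        # Discovery: only healthy instances are handed out to clients.
        return [i for i in self.instances if i.service == service and i.healthy]

    def pick(self, service):
        # Load balancer role: simple round-robin over healthy instances.
        candidates = self.healthy_instances(service)
        if not candidates:
            raise LookupError(f"no healthy instance for {service!r}")
        n = self.counters.get(service, 0)
        self.counters[service] = n + 1
        return candidates[n % len(candidates)]
```

Calling `pick("http")` repeatedly alternates between the registered instances, and an instance marked unhealthy is no longer returned.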

Consul for Service Discovery

There are many possible solutions (etcd, ZooKeeper, SkyDNS) available for Service discovery. Consul is a comprehensive Service discovery solution that covers all the critical components explained above. Following are some architecture details of Consul:

- Has a distributed key-value (KV) store for storing the Service database.

- Provides comprehensive service health checking using both built-in checks and user-provided custom checks.

- Provides a REST-based HTTP API for interaction.

- Service database can be queried using DNS.

- Does dynamic load balancing.

- Supports a single data center and can be scaled to support multiple data centers.

- Integrates well with Docker.

Consul installation

Consul can be installed in standalone mode or as a cluster of servers. For development purposes, a single-node cluster is enough. For production purposes, a multi-node Consul cluster is necessary to get built-in redundancy. When starting a multi-node cluster, each node needs to be given the address of one existing Consul member; after that, the Consul members use the gossip protocol to find each other, and the servers elect a leader and form a working cluster. Consul can be installed as regular application software or run as a Docker Container. For this blog, I will use a single-node Consul Docker Container.

Following command starts Consul Docker Container:

docker run -d -p 8500:8500 -p 172.17.0.1:53:8600/udp -p 8400:8400 gliderlabs/consul-server -node myconsul -bootstrap

Following are some notes on the above command:

- Within Consul, port 8400 is used for RPC, 8500 is used for HTTP, 8600 is used for DNS. By using “-p” option, we are exposing these ports to the host machine.

- “172.17.0.1” is the Docker bridge IP address. We are remapping Consul Container’s port 8600 to host machine’s Docker bridge port 53 so that Containers on that host can use Consul for DNS.

- “bootstrap” option is used to operate Consul in standalone mode.

Following is the Consul version that I am running. This output is inside Consul Container.

# consul --version
Consul v0.6.3

Following output shows an example of the HTTP REST API, listing the active nodes:

$ curl -s http://localhost:8500/v1/catalog/nodes | jq .

[

{

"ModifyIndex": 4,

"CreateIndex": 3,

"Address": "172.17.0.2",

"Node": "myconsul"

}

]

Following output shows that no application services are registered yet; only the built-in “consul” service is listed:

$ curl -s http://localhost:8500/v1/catalog/services | jq .

{

"consul": []

}

Example Application used in this blog

For this blog, we will use the following application.

Following are some notes on the above application:

- Two nginx containers will serve as the web servers. An Ubuntu container will serve as the HTTP client.

- Consul will load balance the request between two nginx web servers.

- DNS and health check will be provided by Consul.

- We will first try this application with manual service registration and later use dynamic service registration using gliderlabs registrator.

Following are some pre-requisites:

To allow Docker Containers to use the Docker bridge IP address as their DNS server, we need to add the following to the Docker start options. For Ubuntu 14.04, these options are specified in “/etc/default/docker”.

DOCKER_OPTS="--dns 172.17.0.1 --dns 8.8.8.8 --dns-search service.consul"

“172.17.0.1” is the Docker bridge IP address in my case. The “dns-search” option allows us to specify the default search domain. Whenever Docker startup options are changed, the Docker engine needs to be restarted.

Example application using manual registration

Application with no health check

First, let’s start the 3 Docker Containers comprising the application:

docker run -d -P --name=nginx1 nginx

docker run -d -P --name=nginx2 nginx

docker run -ti smakam/myubuntu:v3 sh

Following output shows all running Containers, including 3 application Containers as well as Consul:

$ docker ps
CONTAINER ID  IMAGE                     COMMAND                 CREATED            STATUS            PORTS                                                                                                                         NAMES
75663b0a2a9f  smakam/myubuntu:v3        "sh"                    4 seconds ago      Up 3 seconds                                                                                                                                    grave_mahavira
167bb121198e  nginx                     "nginx -g 'daemon off"  42 seconds ago     Up 41 seconds     0.0.0.0:32821->80/tcp, 0.0.0.0:32820->443/tcp                                                                                 nginx2
0ea2573fc764  nginx                     "nginx -g 'daemon off"  44 seconds ago     Up 43 seconds     0.0.0.0:32819->80/tcp, 0.0.0.0:32818->443/tcp                                                                                 nginx1
e27c1d7f63db  gliderlabs/consul-server  "/bin/consul agent -s"  About an hour ago  Up About an hour  0.0.0.0:8400->8400/tcp, 8300-8302/tcp, 8600/tcp, 8301-8302/udp, 0.0.0.0:8500->8500/tcp, 172.17.0.1:53->8600/udp  thirsty_albattani

Let’s register these 2 services manually:

http1.json:

{

"ID": "http1",

"Name": "http",

"Address": "172.17.0.3",

"Port": 80

}

http2.json:

{

"ID": "http2",

"Name": "http",

"Address": "172.17.0.4",

"Port": 80

}

curl -X PUT --data-binary @http1.json http://localhost:8500/v1/agent/service/register

curl -X PUT --data-binary @http2.json http://localhost:8500/v1/agent/service/register
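The same registration can be sketched in Python using only the standard library. The helper names `service_definition` and `register` are hypothetical; the endpoint and JSON fields are the ones used in the curl commands above, and `register` assumes a Consul agent is reachable on localhost:8500.

```python
import json
import urllib.request


def service_definition(service_id, name, address, port):
    """Build the same JSON body as http1.json / http2.json above."""
    return {"ID": service_id, "Name": name, "Address": address, "Port": port}


def register(definition, consul="http://localhost:8500"):
    """PUT the definition to the local agent (needs a running Consul agent)."""
    request = urllib.request.Request(
        consul + "/v1/agent/service/register",
        data=json.dumps(definition).encode("utf-8"),
        method="PUT",
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

With Consul running, `register(service_definition("http1", "http", "172.17.0.3", 80))` would be the equivalent of the first curl command.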

Now let’s look at the Consul service registry. We can see below that the service name “http” is composed of 2 web servers (172.17.0.3:80, 172.17.0.4:80).

$ curl -s http://localhost:8500/v1/catalog/service/http | jq .

[

{

"ModifyIndex": 372,

"CreateIndex": 372,

"Node": "myconsul",

"Address": "172.17.0.2",

"ServiceID": "http1",

"ServiceName": "http",

"ServiceTags": [],

"ServiceAddress": "172.17.0.3",

"ServicePort": 80,

"ServiceEnableTagOverride": false

},

{

"ModifyIndex": 373,

"CreateIndex": 373,

"Node": "myconsul",

"Address": "172.17.0.2",

"ServiceID": "http2",

"ServiceName": "http",

"ServiceTags": [],

"ServiceAddress": "172.17.0.4",

"ServicePort": 80,

"ServiceEnableTagOverride": false

}

]
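A client that consumes this catalog output usually only needs the address and port of each instance. The following Python sketch (the function name `endpoints` is hypothetical) extracts them from the JSON shown above. It assumes that an empty "ServiceAddress" means the node address should be used instead.

```python
import json


def endpoints(catalog_json):
    """Return (ServiceID, address, port) tuples from a catalog service reply."""
    result = []
    for entry in json.loads(catalog_json):
        # Assumption: fall back to the node address when ServiceAddress is empty.
        address = entry.get("ServiceAddress") or entry["Address"]
        result.append((entry["ServiceID"], address, entry["ServicePort"]))
    return result
```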

Now, let’s look at DNS database. This shows both the web servers that are part of “http” service.

$ dig @172.17.0.1 http.service.consul
; <<>> DiG 9.9.5-3ubuntu0.7-Ubuntu <<>> @172.17.0.1 http.service.consul
; (1 server found)
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 4972
;; flags: qr aa rd; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 0
;; WARNING: recursion requested but not available
;; QUESTION SECTION:
;http.service.consul. IN A
;; ANSWER SECTION:
http.service.consul. 0 IN A 172.17.0.3
http.service.consul. 0 IN A 172.17.0.4

We can also look at DNS service records that will show the port number associated with each service. In this case, “http” service exposes port 80.

$ dig @172.17.0.1 http.service.consul SRV
; <<>> DiG 9.9.5-3ubuntu0.7-Ubuntu <<>> @172.17.0.1 http.service.consul SRV
; (1 server found)
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 34138
;; flags: qr aa rd; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 2
;; WARNING: recursion requested but not available
;; QUESTION SECTION:
;http.service.consul. IN SRV
;; ANSWER SECTION:
http.service.consul. 0 IN SRV 1 1 80 myconsul.node.dc1.consul.
http.service.consul. 0 IN SRV 1 1 80 myconsul.node.dc1.consul.
;; ADDITIONAL SECTION:
myconsul.node.dc1.consul. 0 IN A 172.17.0.4
myconsul.node.dc1.consul. 0 IN A 172.17.0.3

Now, let’s ping the DNS name and check whether load balancing is happening. As we can see below, the ping request shifts between 172.17.0.3 and 172.17.0.4 for the service “http.service.consul”.

$ ping -c1 http.service.consul
PING http.service.consul (172.17.0.4) 56(84) bytes of data.
64 bytes from 172.17.0.4: icmp_seq=1 ttl=64 time=0.105 ms
--- http.service.consul ping statistics ---
1 packets transmitted, 1 received, 0% packet loss, time 0ms
rtt min/avg/max/mdev = 0.105/0.105/0.105/0.000 ms

$ ping -c1 http.service.consul
PING http.service.consul (172.17.0.3) 56(84) bytes of data.
64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.110 ms
--- http.service.consul ping statistics ---
1 packets transmitted, 1 received, 0% packet loss, time 0ms
rtt min/avg/max/mdev = 0.110/0.110/0.110/0.000 ms
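The effect seen with ping can be modelled in a few lines of Python. This is only a simulation under the assumption that each DNS answer arrives in shuffled order and that a client such as ping uses the first record; `resolve_a_records` and `simulate_clients` are hypothetical helper names, and `resolve_a_records` only works on a host that can resolve the name.

```python
import random
import socket
from collections import Counter


def resolve_a_records(name):
    """Resolve a name to its IPv4 addresses using the system resolver."""
    infos = socket.getaddrinfo(name, None, socket.AF_INET, socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def simulate_clients(addresses, requests, seed=0):
    """Count which address is hit when each client takes the first shuffled record."""
    rng = random.Random(seed)
    hits = Counter()
    for _ in range(requests):
        answer = list(addresses)
        rng.shuffle(answer)  # assumed: record order varies per answer
        hits[answer[0]] += 1
    return hits
```

For example, `simulate_clients(["172.17.0.3", "172.17.0.4"], 1000)` spreads the requests over both web servers.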

In the example above, we registered the services manually and did not configure any health check. Because of the manual registration, the services stay in the Consul database even after the containers are removed. Because there is no health check, the services also stay in the Consul database even if they die.

We can use the following commands to deregister the services manually.

curl -X PUT http://localhost:8500/v1/agent/service/deregister/http1

curl -X PUT http://localhost:8500/v1/agent/service/deregister/http2

Application with http health check

With an HTTP based health check, Consul periodically sends an HTTP request to the service and stops returning the instance in DNS answers once the health check fails. Consul also provides health check mechanisms other than HTTP.

Following are two example services with http based health check and the example shows how to register them to Consul.

http1_checkhttp.json:

{

"ID": "http1",

"Name": "http",

"Address": "172.17.0.3",

"Port": 80,

"check": {

"http": "http://172.17.0.3:80",

"interval": "10s",

"timeout": "1s"

}

}

http2_checkhttp.json:

{

"ID": "http2",

"Name": "http",

"Address": "172.17.0.4",

"Port": 80,

"check": {

"http": "http://172.17.0.4:80",

"interval": "10s",

"timeout": "1s"

}

}

curl -X PUT --data-binary @http1_checkhttp.json http://localhost:8500/v1/agent/service/register

curl -X PUT --data-binary @http2_checkhttp.json http://localhost:8500/v1/agent/service/register

Following command shows the configured health checks and shows the status as passing.

$ curl -s http://localhost:8500/v1/health/checks/http | jq .

[

{

"ModifyIndex": 424,

"CreateIndex": 423,

"Node": "myconsul",

"CheckID": "service:http1",

"Name": "Service 'http' check",

"Status": "passing",

"Notes": "",

"Output": "",

"ServiceID": "http1",

"ServiceName": "http"

},

{

"ModifyIndex": 427,

"CreateIndex": 425,

"Node": "myconsul",

"CheckID": "service:http2",

"Name": "Service 'http' check",

"Status": "passing",

"Notes": "",

"Output": "",

"ServiceID": "http2",

"ServiceName": "http"

}

]
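The health output above can also be consumed programmatically. The following Python sketch (the function name `healthy_service_ids` is hypothetical) returns the service IDs whose checks are all passing, treating a service with any non-passing check as unhealthy.

```python
import json


def healthy_service_ids(checks_json):
    """Return the sorted service IDs for which every listed check is passing."""
    statuses = {}
    for check in json.loads(checks_json):
        # A service can have several checks; collect all of their statuses.
        statuses.setdefault(check["ServiceID"], []).append(check["Status"])
    return sorted(
        service_id
        for service_id, values in statuses.items()
        if all(value == "passing" for value in values)
    )
```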

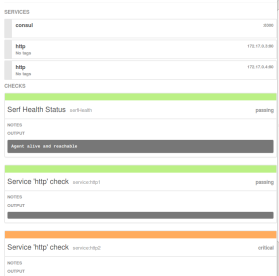

Following picture shows the Consul GUI and services with health check passing for “http” service.

To prove that the health check is working, let’s kill one of the Docker containers using:

docker rm -f nginx2

Following picture shows that the health check for “http2” service is critical:

Once the health check fails, Consul stops returning that instance in DNS answers. Following command shows that only 1 active web server is present for “http” service now.

$ dig @172.17.0.1 http.service.consul
; <<>> DiG 9.9.5-3ubuntu0.7-Ubuntu <<>> @172.17.0.1 http.service.consul
; (1 server found)
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 36858
;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0
;; WARNING: recursion requested but not available
;; QUESTION SECTION:
;http.service.consul. IN A
;; ANSWER SECTION:
http.service.consul. 0 IN A 172.17.0.3
;; Query time: 1 msec
;; SERVER: 172.17.0.1#53(172.17.0.1)
;; WHEN: Sat Apr 09 22:35:10 PDT 2016
;; MSG SIZE rcvd: 72

Application with tcp based check

Following is an example service with a TCP based check. Here, the Consul server periodically attempts a TCP connection to the specified IP and port.

{

"ID": "http",

"Name": "http",

"Address": "172.17.0.3",

"Port": 80,

"check": {

"tcp": "172.17.0.3:80",

"interval": "10s",

"timeout": "1s"

}

}
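Conceptually, a TCP check is just a connection attempt with a timeout. The Python sketch below illustrates the idea and is not Consul's implementation; `tcp_check` is a hypothetical name, and the `connect` parameter exists only so the probe can be exercised without a real network.

```python
import socket


def tcp_check(host, port, timeout=1.0, connect=socket.create_connection):
    """Return "passing" if a TCP connection succeeds in time, else "critical"."""
    try:
        connection = connect((host, port), timeout)
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return "critical"
    connection.close()
    return "passing"
```

`tcp_check("172.17.0.3", 80)` corresponds to one run of the check defined in the JSON above.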

Application using script based check

Following is an example service using script based check. Here, Consul server periodically executes the user specified script to verify the health of the service.

{

"ID": "http",

"Name": "http",

"Address": "172.17.0.3",

"Port": 80,

"check": {

"script": "curl 172.17.0.3 >/dev/null 2>&1",

"interval": "10s"

}

}
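For script based checks, the exit code of the script determines the check status: 0 is passing, 1 is warning, and any other code is critical. The Python sketch below models that mapping; `status_from_exit_code` and `run_check` are hypothetical helpers, not part of Consul.

```python
import subprocess


def status_from_exit_code(code):
    """Map a check script's exit code to a check status."""
    if code == 0:
        return "passing"
    if code == 1:
        return "warning"
    return "critical"


def run_check(command, timeout=10):
    """Run a command (list of arguments) and classify the result."""
    try:
        completed = subprocess.run(
            command,
            timeout=timeout,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
    except (subprocess.TimeoutExpired, OSError):
        # A script that hangs or cannot be started counts as failing here.
        return "critical"
    return status_from_exit_code(completed.returncode)
```

`run_check(["curl", "172.17.0.3"])` would mirror the script used in the service definition above.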

Application using TTL based check

Unlike the previous health checks, which are triggered by the Consul server, this health check is driven by the service itself. The service needs to periodically refresh a TTL timer that the Consul server maintains. If the timer is not refreshed within the TTL period, the service is considered unhealthy. Following is an example service with a TTL based check.

{

"ID": "web",

"Name": "web",

"Address": "172.17.0.3",

"Port": 80,

"check": {

"Interval": "10s",

"TTL": "15s"

}

}

To manually trigger TTL based keepalive update, we can do the following:

curl http://localhost:8500/v1/agent/check/pass/service:http1

curl http://localhost:8500/v1/agent/check/pass/service:http2
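The TTL behaviour can be modelled with a small Python class. This is a toy model under the assumption that a TTL check is critical until its first heartbeat; `TTLCheck` is a hypothetical name, and the injectable `clock` is only there to make the timing easy to follow.

```python
import time


class TTLCheck:
    """Toy model of a TTL check: critical unless refreshed within `ttl` seconds."""

    def __init__(self, ttl, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self.last_pass = None  # no heartbeat received yet

    def heartbeat(self):
        # Equivalent in spirit to calling the check/pass endpoint above.
        self.last_pass = self.clock()

    def status(self):
        if self.last_pass is None:
            return "critical"
        elapsed = self.clock() - self.last_pass
        return "passing" if elapsed <= self.ttl else "critical"
```

A service with a 15s TTL therefore has to send its heartbeat more often than every 15 seconds to stay healthy.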

Example application with automatic registration using Registrator

Manual registration with Consul is error-prone; an application like Gliderlabs Registrator avoids this problem. Registrator listens for Docker events and dynamically updates the Consul service registry.

Choosing the IP address for the registration is critical. There are 2 choices:

- With the internal IP option, the Container IP and port number get registered with Consul. This approach is useful when we want to access the service registry from within a Container. Following is an example of starting Registrator using the “internal” IP option.

docker run -d -v /var/run/docker.sock:/tmp/docker.sock --net=host gliderlabs/registrator -internal consul://localhost:8500

- With the external IP option, the host IP and port number get registered with Consul. It’s necessary to specify the IP address manually; if it’s not specified, the loopback address gets registered. Following is an example of starting Registrator using the “external” IP option.

docker run -d -v /var/run/docker.sock:/tmp/docker.sock gliderlabs/registrator -ip 192.168.99.100 consul://192.168.99.100:8500

First, let’s start Consul server and Registrator:

docker run -d -p 8500:8500 -p 172.17.0.1:53:8600/udp -p 8400:8400 gliderlabs/consul-server -node myconsul -bootstrap

docker run -d -v /var/run/docker.sock:/tmp/docker.sock --net=host gliderlabs/registrator -internal consul://localhost:8500

Let’s start the 2 nginx services with the service name “http” and with HTTP based health checks enabled. “SERVICE_80_CHECK_HTTP” takes the path to check, so it is set once, to “/”.

docker run -d -p :80 -e "SERVICE_80_NAME=http" -e "SERVICE_80_ID=http1" -e "SERVICE_80_CHECK_HTTP=/" --name=nginx1 nginx

docker run -d -p :80 -e "SERVICE_80_NAME=http" -e "SERVICE_80_ID=http2" -e "SERVICE_80_CHECK_HTTP=/" --name=nginx2 nginx

In the above example, we have passed environment variables that Registrator will use when registering the service with Consul.
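The naming pattern of these variables, SERVICE_<port>_<KEY>, can be illustrated with a short Python sketch. It only shows how such variables could be grouped per port; `service_metadata` is a hypothetical helper and not Registrator's actual code.

```python
def service_metadata(env, port):
    """Collect SERVICE_<port>_<KEY> variables into a dict with lower-cased keys."""
    prefix = f"SERVICE_{port}_"
    return {
        key[len(prefix):].lower(): value
        for key, value in env.items()
        if key.startswith(prefix)
    }
```

For the nginx1 container above, `service_metadata(env, 80)` yields the name, ID and HTTP check path for port 80, while unrelated variables are ignored.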

Let’s look at the service details now:

$ curl -s http://localhost:8500/v1/catalog/service/http | jq .

[

{

"ModifyIndex": 20,

"CreateIndex": 16,

"Node": "myconsul",

"Address": "172.17.0.2",

"ServiceID": "http1",

"ServiceName": "http",

"ServiceTags": [],

"ServiceAddress": "172.17.0.3",

"ServicePort": 80,

"ServiceEnableTagOverride": false

},

{

"ModifyIndex": 19,

"CreateIndex": 17,

"Node": "myconsul",

"Address": "172.17.0.2",

"ServiceID": "http2",

"ServiceName": "http",

"ServiceTags": [],

"ServiceAddress": "172.17.0.4",

"ServicePort": 80,

"ServiceEnableTagOverride": false

}

]

As we can see above, the “http” service is composed of “http1” at “172.17.0.3:80” and “http2” at “172.17.0.4:80”.

We can look at DNS details to confirm the same:

$ dig @172.17.0.1 http.service.consul SRV
; <<>> DiG 9.9.5-3ubuntu0.7-Ubuntu <<>> @172.17.0.1 http.service.consul SRV
; (1 server found)
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 34138
;; flags: qr aa rd; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 2
;; WARNING: recursion requested but not available
;; QUESTION SECTION:
;http.service.consul. IN SRV
;; ANSWER SECTION:
http.service.consul. 0 IN SRV 1 1 80 myconsul.node.dc1.consul.
http.service.consul. 0 IN SRV 1 1 80 myconsul.node.dc1.consul.
;; ADDITIONAL SECTION:
myconsul.node.dc1.consul. 0 IN A 172.17.0.4
myconsul.node.dc1.consul. 0 IN A 172.17.0.3

Following example shows service registration with a TTL based check enabled for a service exposing port 80.

docker run -d -p :80 -e "SERVICE_80_NAME=http" -e "SERVICE_80_ID=http1" -e "SERVICE_80_CHECK_TTL=30s" --name=nginx1 nginx

docker run -d -p :80 -e "SERVICE_80_NAME=http" -e "SERVICE_80_ID=http2" -e "SERVICE_80_CHECK_TTL=30s" --name=nginx2 nginx

As we can see, Consul with Registrator makes the Service discovery process dynamic and easy to manage.
